Executive Summary: Sadly, you are on your own. None of the Internet Service Providers (ISPs), Network Operations Centers (NOCs), or companies with infected systems that I contacted were willing, or claimed to be able, to provide more than a small modicum of assistance, even though their resources were being used to attack my client. Law enforcement (the FBI) was contacted, and a week later they had not even assigned an agent to the case.

If you have a lot of time on your hands, you can gather evidence, package it up in the form each ISP wants, and send it to their abuse department, and you'll never know if or when anything was done about the problem system, because they all hide behind privacy policies. You will spend a lot of time on every single bot that is attacking you, and your efforts are futile: they'll hardly make a dent in the botnet or the effect it has on your network. Botnet masters, you have won! The Internet is yours to do whatever you want with and to steal whatever you can steal, and the people who can do something to thwart you just don't care. But I'll bet the botmasters knew that already, too! ISPs in America don't care about malicious traffic, wherever it comes from, as they have no interest in blocking any of it. As a victim, you are on your own. But that doesn't mean you are helpless and defenseless!

Shameless plug

Not everything we did was successful. But ultimately, the client was up, with their business-critical Internet functionality running, within hours of my arriving on-site that fateful Sunday, and it has stayed up ever since: practically zero network downtime during business hours as a result of this attack. Most of my articles are freebies, some of them quite popular. Search Google for "Install Server 2003 Intel desktop board" or "Volume shadow copy broken" - they help out more than 400 people every month. There are some free hints and tips here, but if you found this because you are now a victim of a botnet, my goal is to get into the trenches, pick up a weapon, and fight for your company like it was my own. In this instance, I'll give away some valuable tidbits for free, but some of the techniques I discovered I can't share for free. Computers, hardware, software, and networks have been my life since 1976, and I leverage all that knowledge when fighting for my clients. If you are being attacked, I'd love to help out. Contact information is on the contact page on the left side.

What happened Sunday, 11/14/2010

At ~3:00 AM my client was knocked completely off the Internet. From the outside, you couldn't see their website, couldn't connect to their network via VPN, couldn't get email, etc. It looked like a down Internet connection. They discovered the problem around 10 AM and contacted their ISP; the line was a Comcast Business Class connection, 20 Mb/s down / 2 Mb/s up. They spent 2+ hours bouncing around Comcast's voicemail system trying to find someone in business support services who would actually answer a phone. Once they reached someone, they determined that as far as Comcast knew, their connection was fine.

My client's network person was called and dispatched to the site to check on, and possibly reboot, the routers, firewalls, etc., which seemed a likely source of the problem, and to do some diagnosis to see if something else might be wrong. Around 4:30 PM their network person looped me in by phone and informed me that the firewall's CPU utilization was pegged at 100% and it was barely responding to requests from the LAN. With the Internet unplugged from the firewall, it became responsive and CPU usage dropped considerably. The only time I'd ever seen a Sonicwall react this way was when it was overloaded with traffic from inside a company where almost every PC was infected with a bot that was actively attacking other addresses on the Internet, so I immediately suspected a bot. That is another story, covered in a separate article.

Dispatched to the site, I arrived around 6:00 PM, and after some preliminary checks and analysis I hooked up to do a quick packet sniff on the WAN line. I immediately determined they were the victim of a DDoS attack being carried out by a botnet whose bots were distributed all over our planet! This was different from my previous run-in with a botnet: this company was being attacked externally, not compromised and attacking outward from inside.

The packet capture I have is ~50 MB of data covering 18:38:00 through 18:45:10: about 10,000 requests per minute and 6 MB of data per minute, continuously hammering their server. No wonder the CPU on the firewall was pegged and the rest of the web services were unresponsive! There was no room for any other data to squeeze through!

The path forks in many directions here. I often find that pursuing multiple solutions to a problem in parallel is a faster way to a solution than traveling one path until it succeeds or fails.

One path ("Plan A" - ISP blocks malicious bots / traffic): The client's network person got on the phone with Comcast and eventually ended up with a Tier 2 support person, who got in touch with an engineer at the NOC (Network Operations Center) in an attempt to get these packets blocked. A list of source IP addresses sorted by packet count helped Comcast attempt to target specific IPs. The biggest offender was an IP address at "Advanced Colocation Services" in Austin, Texas. It took Comcast 20 minutes to have one IP address filtered, and they were not willing to do much more than 5. That first and most offending address, 209.51.184.9, will come back into the story later.

Here is a list of attacking bots, sorted by packet count with the worst offender first. The entire list has over 850 attacking systems in it! And that is just from the few minutes captured the first day! The list shows the reverse DNS name where available.

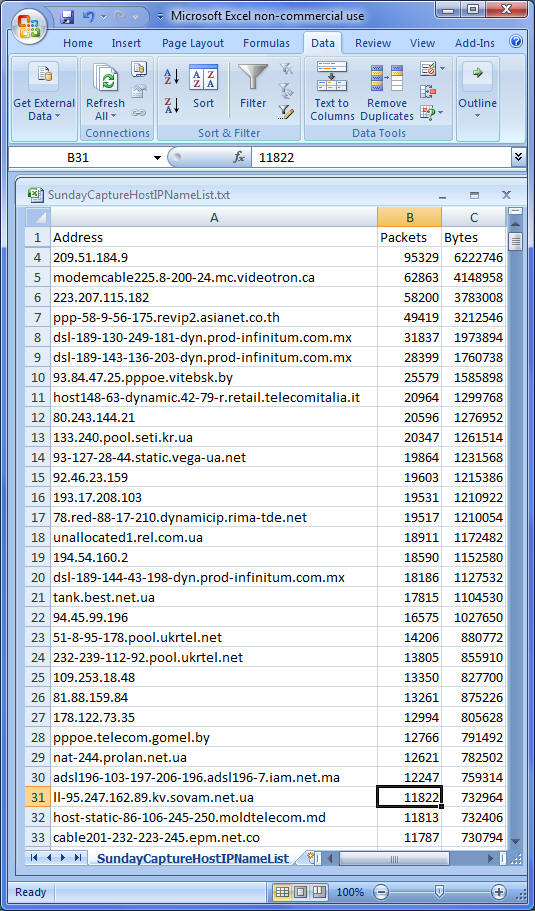
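
The post doesn't say which tool produced the sorted list (Wireshark's Statistics > Endpoints view builds a similar table). As a minimal sketch of the same tally, assuming the capture was saved in the classic libpcap file format (not pcapng) with plain Ethernet framing, the Python below counts IPv4 packets per source address and sorts them worst offender first. The function name `top_talkers` is hypothetical, and reverse DNS lookups are left out to keep it self-contained.

```python
import socket
import struct
from collections import Counter

# Magic numbers for classic libpcap files (microsecond and nanosecond variants).
_PCAP_MAGIC = {
    b"\xd4\xc3\xb2\xa1": "<",  # little-endian, microsecond timestamps
    b"\xa1\xb2\xc3\xd4": ">",  # big-endian, microsecond timestamps
    b"\x4d\x3c\xb2\xa1": "<",  # little-endian, nanosecond timestamps
    b"\xa1\xb2\x3c\x4d": ">",  # big-endian, nanosecond timestamps
}
LINKTYPE_ETHERNET = 1
ETHERTYPE_IPV4 = 0x0800


def top_talkers(pcap_bytes, limit=None):
    """Return [(source_ip, packet_count), ...] sorted by count, highest first.

    Sketch only: handles classic pcap with Ethernet framing and untagged IPv4;
    pcapng is rejected, and VLAN-tagged or IPv6 frames are skipped.
    """
    endian = _PCAP_MAGIC.get(pcap_bytes[:4])
    if endian is None:
        raise ValueError("not a classic libpcap file")
    (linktype,) = struct.unpack(endian + "I", pcap_bytes[20:24])
    if linktype != LINKTYPE_ETHERNET:
        raise ValueError("this sketch only handles Ethernet captures")

    counts = Counter()
    offset = 24  # skip the 24-byte global header
    while offset + 16 <= len(pcap_bytes):
        # Per-record header: ts_sec, ts_frac, captured length, original length.
        _, _, incl_len, _ = struct.unpack(endian + "IIII", pcap_bytes[offset:offset + 16])
        offset += 16
        frame = pcap_bytes[offset:offset + incl_len]
        offset += incl_len
        # Need 14 bytes of Ethernet header plus a 20-byte minimum IPv4 header.
        if len(frame) < 34:
            continue
        (ethertype,) = struct.unpack("!H", frame[12:14])
        if ethertype != ETHERTYPE_IPV4:
            continue
        # IPv4 source address sits 12 bytes into the IP header (frame bytes 26-29).
        counts[socket.inet_ntoa(frame[26:30])] += 1
    return counts.most_common(limit)
```

For example, `top_talkers(open("capture.pcap", "rb").read(), 20)` would return the 20 busiest sources; the file name is a placeholder.
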

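The rates quoted for the capture can be sanity-checked with a little arithmetic. This assumes decimal units (1 MB = 10^6 bytes, 1 Mb = 10^6 bits) and that the sniffer caught all of the traffic during the capture window:

```latex
\begin{aligned}
t &= 18{:}45{:}10 - 18{:}38{:}00 = 7\ \text{min}\ 10\ \text{s} = 430\ \text{s} \\
\frac{50\ \text{MB}}{430\ \text{s}} &\approx 0.116\ \text{MB/s} \approx 7.0\ \text{MB/min} \\
6\ \text{MB/min} &= \frac{6 \times 8\ \text{Mb}}{60\ \text{s}} = 0.8\ \text{Mb/s} = 4\%\ \text{of}\ 20\ \text{Mb/s} \\
\frac{10{,}000\ \text{requests/min}}{60\ \text{s/min}} &\approx 167\ \text{requests/s}
\end{aligned}
```

The whole-capture average of roughly 7 MB/min is in the same range as the ~6 MB/min figure. If these numbers are representative, the raw bandwidth was a few percent of the downstream line, which suggests the per-packet load on the firewall's CPU mattered more than the line's capacity.
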
Unfortunately, after hours on the phone with Comcast, it was determined that the ability of NOCs to block packets is extremely limited. Though they blocked the first 5, it had no impact whatsoever. Our hope was that if the biggest offenders were blocked, the firewall might be able to cope with the remaining packets. It seemed like a viable solution initially, but in the end Comcast didn't make even a dent in the traffic. Score +1 for the bad guys.

Another path, "Plan B", started around 7:00 PM Sunday

The client happened to have a Sonicwall firewall, and while the client's engineer was working with Comcast, I contacted Sonicwall. My question was this: I understood the device was overloaded with requests and only had so much CPU power inside. Given that the site behind the firewall was under attack, what could be done to minimize the firewall's CPU requirements, hopefully relieving the pressure and allowing as much data through as possible? I anticipated the pain point would then move to the next layer, but as long as the firewall was the weak link, no other legitimate data would get through. After waiting on hold for almost half an hour, at 7:30 PM I was auto-forwarded to a voicemail box where I had to leave a message and was then disconnected. They called me back at 8:30 PM and we spoke for half an hour.

Another path, "Plan C", started around 7:30 PM Sunday, right after leaving a voicemail for Sonicwall

Sitting on hold gave me a lot of time to think about other things to do. Upon leaving the message, I departed the client's site to pick up some equipment I had in storage. During a different botnet incident, I had luck with a stupid wireless firewall / router, specifically an old DLink DI-624 I had lying around. Briefly, the very smart Sonicwall tries to process every packet: inspecting it for bad stuff, applying a list of firewall and NAT rules, looking inside the packet for known malware signatures, and so on. In essence, it is trying to be a full-on packet-inspecting firewall, and this costs CPU power. The DLink, being a much dumber device, has some port mapping and NAT functionality but doesn't do nearly as much work on every packet as the Sonicwall does, and it was more than happy to let packets drop on the floor if it needed to. So in an ironic twist, the stupid device actually did a better job of letting data through than the smart device! Or at least that is what happened last time, so it was worth a shot here. I'd briefly looked for it before leaving for the client's site but couldn't put my hands on it quickly. Now I went back, located it, and returned to the client's site.

8:30 PM, Plan B, Sonicwall CPU pressure relief

I was searching for the equipment for Plan C when Sonicwall support called me back. Their big suggestion was an option in the enhanced firmware to detect and defend against attacks. Unfortunately, the client wasn't yet running the enhanced firmware. They had a document describing the use of the new feature, along with another document explaining how to convert the existing configuration to one compatible with the enhanced firmware. With all the documents in hand, I was to call back once I was on site again.

~9:00 PM, Plan C, DLink "less smart" router

With the router / firewall and power supply in hand, I left for the client's site.

9:30 PM, back on site, Plan B (Sonicwall CPU pressure relief)

The client's Director of IT joined the party. In preparation for converting to the enhanced firmware, the IT Director copied every config screen into a Word document as a CYA move. We then proceeded to follow the instructions Sonicwall had sent for converting the config and upgrading.
Those instructions were wrong: they pointed us to a screen for extracting data that did not exist in our firewall. On another call, support said 'use the tool on a website', and when we followed the instructions there, our firewall was NOT on the list of firewalls the tool could convert configurations for. I ended up creating a minimal configuration by hand, with just enough to see whether turning on this magic option would relieve the pressure. The firewall, subject to the same data deluge as before, was more responsive in the GUI but was still overloaded, pegged at 100% CPU, and dropping data like crazy, so much so that it wasn't a viable solution. This took about 3-4 hours and a couple of calls back to Sonicwall support, whose instructions had sent us down a blind alley because they were completely wrong. After none of their directions worked, Sonicwall support offered to convert our configuration for us. By then we had already determined, based on the minimal configuration I'd created by hand, that this was not going to work as a long-term solution, and the firewall upgrade path was put on the back burner. Plan B was not viable. The new configuration file arrived on Monday at ~1:30 PM, but that will be covered in its proper time sequence.

Plan C, DLink "less smart" router

During some of the breaks in Plan B's action, I was keeping the Plan C plate in the air and spinning. Using that router's "one public IP address NAT and port mapping" functionality, I was able to get SMTP mail flowing, along with Outlook Web Access (OWA) and a couple of other minor services. Their encrypted email runs on another product with a different public IP address. That service was temporarily dropped, as was their website, since each used its own public IP address and the DLink could handle only one. VPN access was also left disabled for now.
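
The smart-versus-stupid effect described above can be illustrated with a deliberately crude model: a device has a fixed CPU budget per second, each packet costs some amount of work, and once demand exceeds the budget, packets are dropped at random, legitimate ones included. The Python below is a toy sketch; the capacity and per-packet costs are made-up numbers, not measurements of a Sonicwall or a DI-624 (and not the rates from the capture), and real devices also burn CPU on packets they end up dropping, which this model ignores.

```python
def served_fraction(capacity_per_sec, packets_per_sec, cost_per_packet):
    """Fraction of arriving packets a device can fully process with a fixed CPU budget.

    Toy model: work needed = packets * cost; anything beyond capacity is dropped
    uniformly at random, so every traffic class loses the same share.
    """
    demand = packets_per_sec * cost_per_packet
    if demand <= capacity_per_sec:
        return 1.0
    return capacity_per_sec / demand


def legit_packets_through(capacity_per_sec, attack_pps, legit_pps, cost_per_packet):
    """Legitimate packets per second that survive when mixed with attack traffic."""
    share = served_fraction(capacity_per_sec, attack_pps + legit_pps, cost_per_packet)
    return legit_pps * share


# Hypothetical numbers, chosen only to show the shape of the effect.
CAPACITY = 1_000_000   # units of "work" the device can do per second
ATTACK_PPS = 10_000    # attack packets per second
LEGIT_PPS = 200        # legitimate packets per second

smart = legit_packets_through(CAPACITY, ATTACK_PPS, LEGIT_PPS, cost_per_packet=500)  # deep inspection
dumb = legit_packets_through(CAPACITY, ATTACK_PPS, LEGIT_PPS, cost_per_packet=50)    # plain NAT / port map
print(round(smart, 1), round(dumb, 1))  # prints: 39.2 200.0
```

In this toy, cutting the per-packet cost by 10x takes the device from dropping about four out of five legitimate packets to dropping none; it says nothing about what the real devices' costs were.
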

With the DLink "less smart" router installed and configured, my client now had a working Internet connection as well as flowing email! I did a quick speed test: they were getting around 5.8 Mb/s downstream and 768 Kb/s upstream, which isn't nearly what their 20 Mb/s downstream / 2 Mb/s upstream line should deliver, but was still better than the DSL line they had disconnected a year or two earlier. It wasn't optimal, but it was better than a complete network outage. Sometimes the war effort needs the first fuse, not the best fuse. That is a story for another day. It would allow them to keep doing business over the net, though with some compromises, like having to manually encrypt sensitive information. But given this was their 4th quarter and the busiest time of the year for the whole staff, it was as good as things were going to get under the circumstances.

During various brainstorming sessions, other potential action plans were formulated. Those included:

Plan D: Get more stupid routers

Since stupid routers seemed better able to handle the traffic than the smart Sonicwall, a couple more stupid routers, one for each public address, might work nicely. The problem is that the router we knew had the right balance of smart and stupid wasn't made anymore, and there is no specification for how 'stupid' or how 'smart' a piece of equipment is. It would be a matter of trying a bunch of products: if one worked, use it, and if it didn't, put it back in the box and move on to the next one. But at less than $100 each, it is a small price for a potentially decent temporary solution.

Plan E: Move to another IP address block

We were all at a loss to explain why this company had been targeted at all. Nobody could figure out any good reason. It's not like they were an Amazon or eBay or Google or a government agency or anything significant. Nor were they an auto company or part of any of the other big industries here in Michigan.
So if perhaps they were targeted in error, either at random or via an IP address that used to belong to someone more interesting, moving to a new address block might solve the problem. My opinion: if the bad guys were targeting by IP address, this would be a nice quick solution. If they were targeting by domain name, they were hosed. But it should be easy enough to change, so it was worth a shot.

Plan F: Contact law enforcement

This is a crime, but given that lives weren't being lost or threatened, I wasn't optimistic. But you never know.

Plan G: <proprietary>

Plan H: <proprietary>

Plan I: <proprietary>

Plan J: Get ahold of the bot's code, do some black-box characterization of how it works, and use that to cut it off at the command-and-control level.

At 2:30 AM Monday, the IT Director wrote a status update to the employees telling them what was working, what wasn't yet, and that we had no idea why or who was behind this. I finally made it to bed around 3:15 AM on Monday morning, totally exhausted, frustrated at not knowing who or why, and wondering if there was more bad stuff lurking ahead of us. Even with all that, we felt good. Bruised and battered, but we had won this round. The company had Internet access at decent speed, email was flowing both in and out, and their website wasn't an e-commerce platform but more of an information portal for clients and prospects. Business could continue mostly normally on Monday. The next critical functions were VPN access for the remote employees and the encrypted mail system; for the least important item, the website, we had a potential plan.

The week of Monday, 11/15/2010

There is a lot more from this next week. As I write, I'll publish updates.

Law Enforcement

In summary, the 'contact law enforcement / FBI' path went nowhere.

Get and analyze the bot to neuter it - ISPs like Comcast, AT&T, etc.

When I was battling the other botnet from within, I had access to the bot's executable code.
You can read that article, but briefly, I was able to run it in an "isolation booth" and see how it operated, and knowing how it contacted its master, I could neuter it from within the company. In this case, the bots were external rather than internal, so I could see them attacking but couldn't see how they were receiving their instructions. If I could get my hands on a copy of the botnet code, I could run it locally, see how it phones home and receives instructions, and potentially neuter them all by contacting the master location it phones home to. Over the next few days, I contacted various entities, trying to get them to cooperate. AT&T, Comcast, and other ISPs did not care. They were happy to attempt to inform the end user there was a problem if I jumped through a ton of hoops, but they would not allow me to contact the compromised end users. In an effort to get my hands on the botnet code, I even offered to personally visit a few of the compromised homes on my own time and money, and offered to help clean their systems for free. None of the ISPs would even present my offer! They all cited "privacy policy", even though sending my information to their customers would not have violated anyone's privacy.

Get and analyze the bot to neuter it - Specific companies

Advanced Colocation, AKA: Hurricane Electric (http://he.net)

Via some very easy "Internet 100" level research, the worst offending IP address, 209.51.184.9, was traced back to a company called Hurricane Electric. So I contacted their technical support to get in touch with their security people and, hopefully, get them to cooperate and get a copy of the bot back to me. At first, they didn't believe me. So I sent them a network sniff capture, with the last octet of the IP address obscured so as not to tell them exactly which system was compromised.
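
Obscuring the last octet, as described here, is easy to automate when sharing sniffer output as text rather than a screenshot. A minimal Python sketch, assuming the output is plain text containing dotted-quad IPv4 addresses; the function names and the `xxx` placeholder are illustrative choices, not anything from the original write-up:

```python
import ipaddress
import re

# Four dot-separated groups of 1-3 digits; validity is checked separately.
_IPV4_CANDIDATE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def mask_last_octet(addr: str) -> str:
    """'192.0.2.45' -> '192.0.2.xxx': keeps the /24 visible, hides the host."""
    ip = ipaddress.IPv4Address(addr)  # raises ValueError if addr is not valid IPv4
    network_part = str(ip).rsplit(".", 1)[0]
    return network_part + ".xxx"


def redact_text(text: str) -> str:
    """Mask the last octet of every valid IPv4 address found in free text."""
    def _replace(match):
        candidate = match.group(0)
        try:
            return mask_last_octet(candidate)
        except ValueError:
            return candidate  # e.g. "999.1.1.1" only looks like an address; leave it
    return _IPV4_CANDIDATE.sub(_replace, text)


print(redact_text("198.51.100.23 -> 203.0.113.80 TCP 60"))
# prints: 198.51.100.xxx -> 203.0.113.xxx TCP 60
```

Keeping the first three octets still lets the recipient recognize its own address block, which is the point of sharing it, while withholding which host is infected.
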

My goal was to get them to cooperate: if they wanted to know which of their systems was now in the hackers' control, I would tell them if they gave me a copy of the malicious code. Hurricane Electric never cooperated at all; they cared more about protecting the identity of the compromised server's owner than about having it cleaned. Their delays cost 3 days of possible remediation time, and ultimately I discovered the owners of the machine without Hurricane Electric's assistance. As it turns out, they merely provided the rack space, power, and Internet feed for the company that owned or leased the server itself. By doing my own research into the system at the attacking IP address, I was able to determine who owned this computer! I was actually excited, as surely they would be interested in getting their system cleaned and helping out the company they'd been attacking for the last week. By poking at the offending IP address with various tools, I identified the server as belonging to a company called ZScaler. I'd never heard of them before, but after some searching I got very optimistic: they are a company that touts itself as a checkpoint for all Internet traffic. In fact, as a web security company that secures traffic, if anyone was going to be interested in helping the security of the Internet, it would be them! They had a web form that automatically opened a trouble ticket. On Monday 11/22/2010 @ 10:13 PM I opened a ticket, and 20 minutes later a tech named Paban called me back and we were talking about what I'd found. I was impressed! I sent them the same screenshot of the sniffer traffic, again with the last octet obscured. I was happy to help them, but I wanted a little help in return: again, I wanted the bot code. I'd help them if they would help me. Their VP of engineering got on the phone with me and said they would cooperate as much as they could.
Unfortunately, they are basically a middleman for web traffic, attempting to scrub it of anything bad before it goes into or out of their client companies. They contacted their client, and the client discovered a couple of systems that were completely hosed up with malware. They were going to re-image those systems, destroying the bot and letting the entire botnet live on to keep causing problems for innocent companies. But I give them credit: ZScaler at least tried.

Empire Data Technologies, Inc. - EDTHosting.com - web hosting company

This company was the first to take my information on which server was compromised. They confirmed they were infected, and then refused to give me the malicious code, claiming it would be a violation of their privacy policy. They were the reason I didn't just give away the IP addresses of the compromised systems up front to anyone else. So I got a little nasty with them: I figured out which customers were hosted on their compromised servers and started calling those end customers directly, hoping to get them to OK my access to the system for the purpose of retrieving the malicious bot code. Every customer I contacted was unhappy with EDTHosting, but nobody ever got back to me with any of the bot code.

Conclusion from trying to get my hands on the bot

This is why I put in the executive summary: "To those running botnets: You have nothing to be afraid of here in the United States. ISPs here protect your bots from being discovered and cleaned by hiding behind a combination of security / privacy policies as well as inept reporting to the end user." What makes me sadder still: the Internet is this beautiful thing. Never have so many people had such instant, ready access to so much knowledge, everywhere, as today. What used to take years to learn can now be picked up by a complete novice in minutes by watching a how-to video someone else created.
And bad people are going to take this wonderful thing we call the Internet and turn it into a vast wasteland where nobody wants to go because it is too dangerous. Bad people are turning the Internet into a junk pile, the way CB radio went in the 1970s and the way spammers ruined the Internet newsgroups in the 1990s. I hope we figure out that we shouldn't let the bad people win. As long as we sit around doing nothing about it and don't help each other fight the good fight, the bad people will win.

Things I've discovered since

There are companies where you can rent your own botnet! Yes, now any idiot with a credit card can rent the services of a botnet and take down your favorite company for not much money. Of course, you are giving your money to a criminal enterprise. But here is some light reading for the really curious: http://www.zdnet.com/blog/security/study-finds-the-average-price-for-renting-a-botnet/6528

To be written about when I can:
